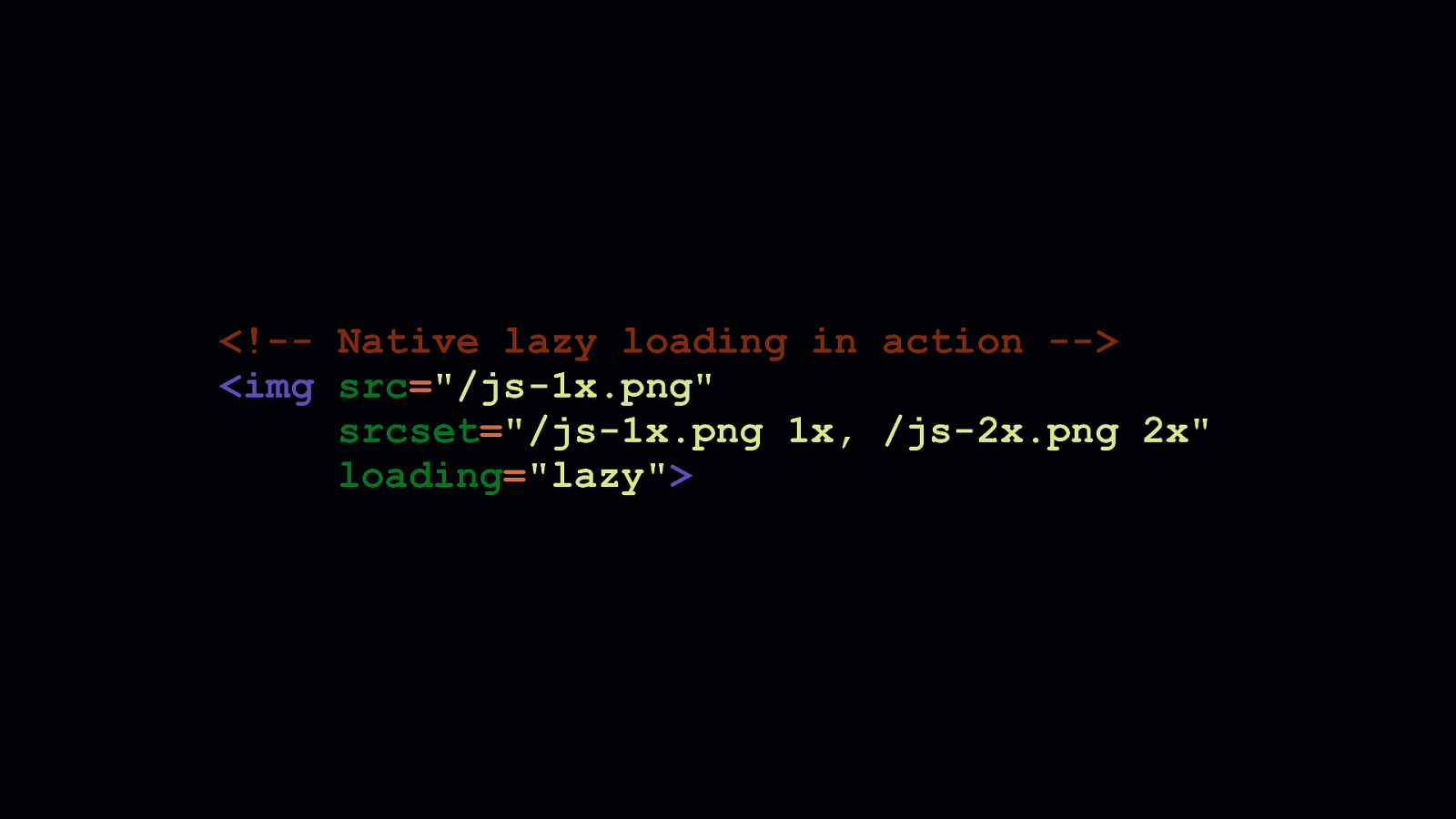

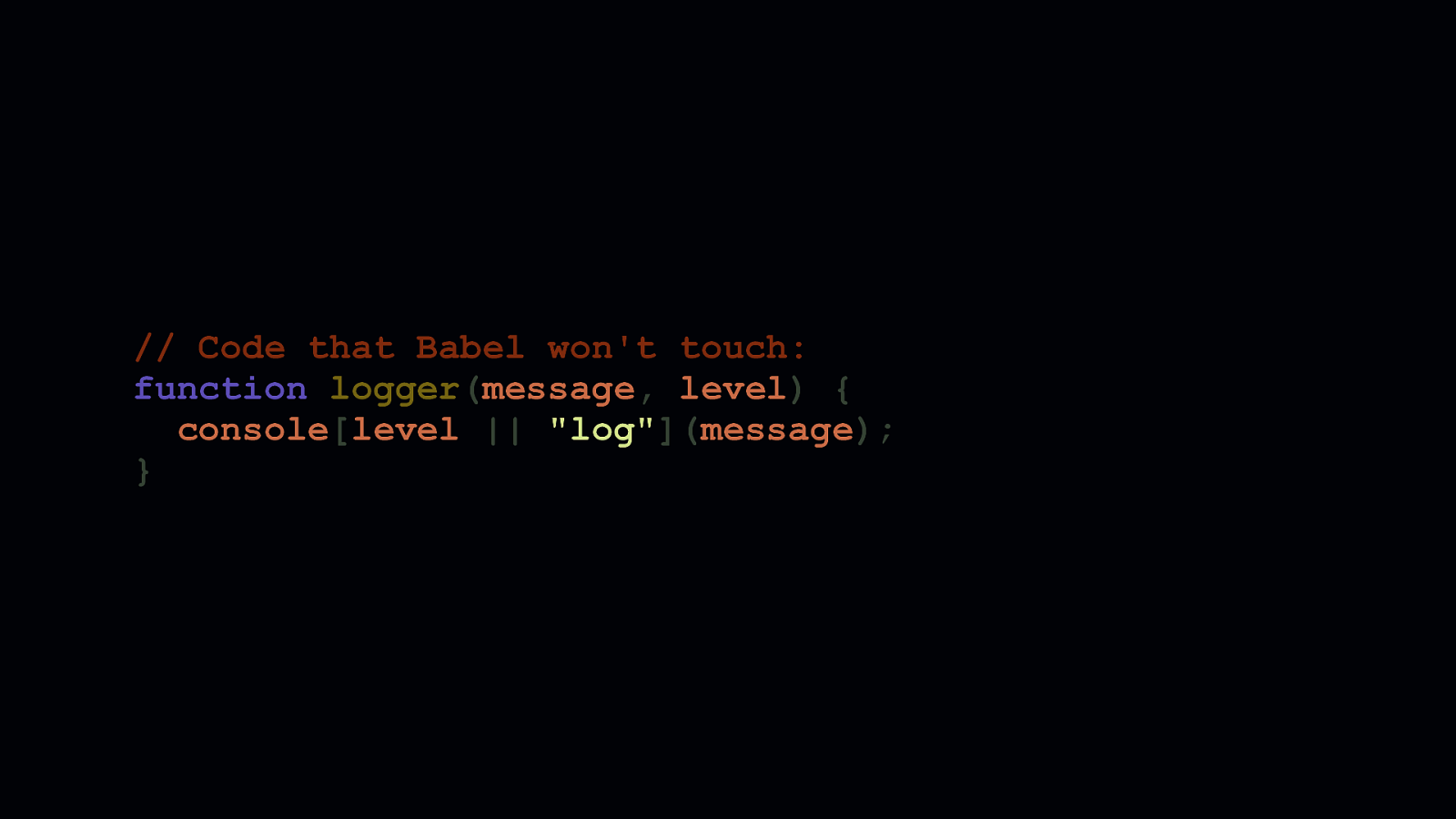

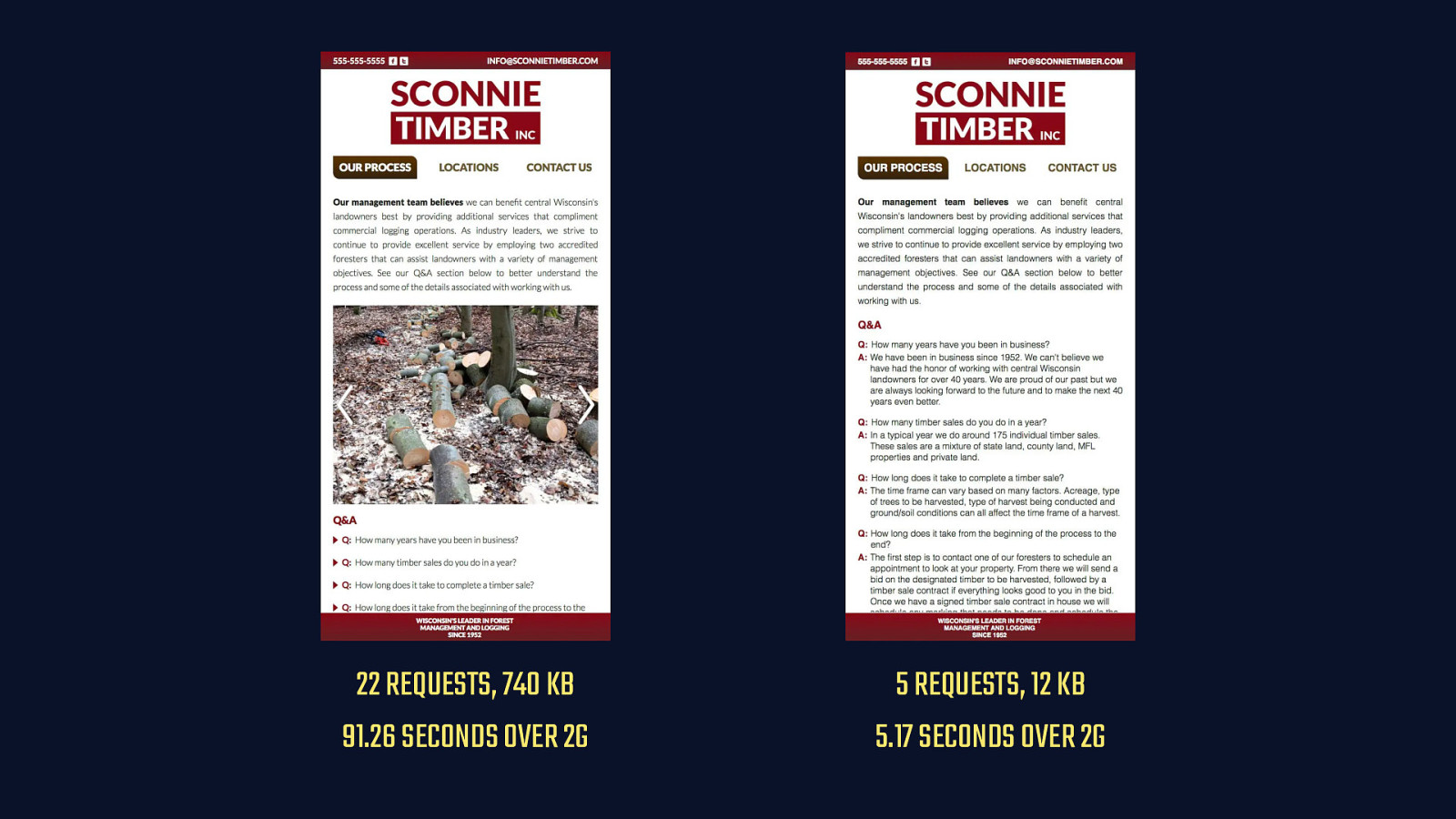

```html
<!-- Native lazy loading in action -->
<img src="/js-1x.png" srcset="/js-1x.png 1x, /js-2x.png 2x" loading="lazy">
```

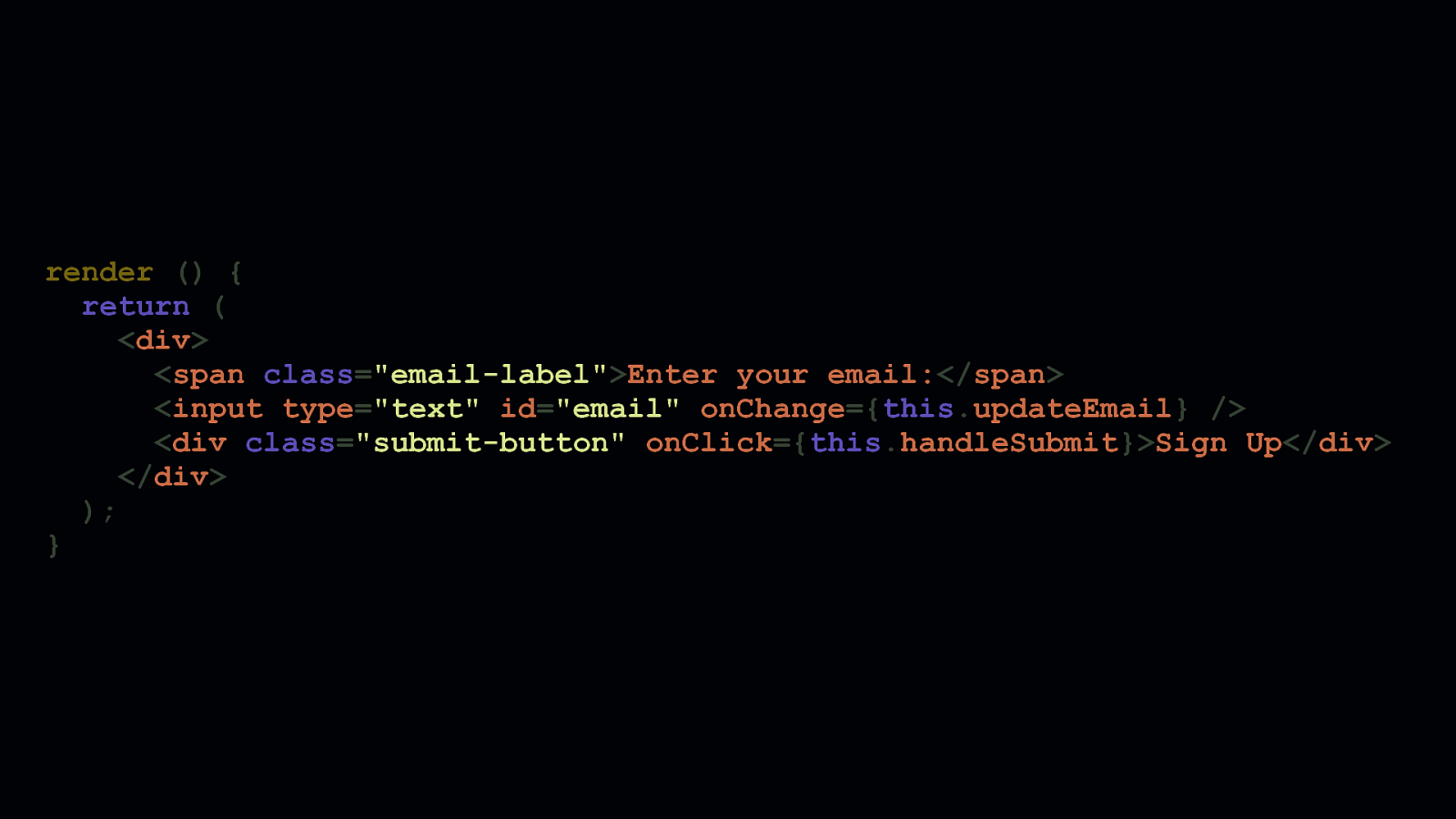

Regardless, none of these JavaScript solutions can be as robust as a native browser solution. Chrome has recently shipped native lazy loading, which you can turn on and try out in your Chrome flags. Native lazy loading adds a new attribute to the interfaces for `<img>` and `<iframe>` elements: the `loading` attribute. It takes three values: `auto`, `eager`, and `lazy`.

- `auto` does what browsers already do today. If browser heuristics improve in the future, browsers may use it to intelligently defer loading assets depending on network conditions.
- `eager` ensures the content is loaded no matter what.
- `lazy`, as in the snippet above, ensures the content is lazy loaded.

When the `loading` attribute is set to `lazy` on an image, Chrome issues a range request for the first 2 KB of the image so it can determine the element's dimensions. Then, using an observer in the browser internals, it loads the content as the element approaches the viewport.
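Why is a 2 KB prefix enough to size an image? The following is a minimal sketch, not Chrome's internal implementation, that reads a PNG's width and height from its first bytes. It assumes PNG input only, since JPEG, WebP and other formats store dimensions differently. In a PNG, the 8-byte signature is followed by the `IHDR` chunk, whose width and height are big-endian 32-bit integers at byte offsets 16 and 20, well inside the first 2 KB. The helper names `pngDimensions` and `fetchPngDimensions` are hypothetical, and the `Range: bytes=0-2047` header (the first 2048 bytes) is only an approximation of the browser's request.

```javascript
// Sketch: read a PNG's intrinsic size from the first bytes of the file.
// Assumes PNG input; other image formats store dimensions elsewhere.

const PNG_SIGNATURE = [0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a];

// Hypothetical helper: takes a Uint8Array and returns { width, height },
// or null if the bytes do not start with a PNG header.
function pngDimensions(bytes) {
  // Signature (8) + IHDR length (4) + "IHDR" type (4) + width (4) + height (4) = 24 bytes.
  if (bytes.length < 24) return null;
  for (let i = 0; i < PNG_SIGNATURE.length; i++) {
    if (bytes[i] !== PNG_SIGNATURE[i]) return null;
  }
  // The first chunk must be IHDR; its type code sits at offsets 12..15.
  const type = String.fromCharCode(bytes[12], bytes[13], bytes[14], bytes[15]);
  if (type !== "IHDR") return null;
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  return {
    width: view.getUint32(16), // DataView reads big-endian by default
    height: view.getUint32(20),
  };
}

// Hypothetical helper: ask for only the first 2 KB, similar in spirit to the
// browser's range request. A server that ignores Range replies with the full file,
// which still works, just without the bandwidth saving.
async function fetchPngDimensions(url) {
  const response = await fetch(url, { headers: { Range: "bytes=0-2047" } });
  const bytes = new Uint8Array(await response.arrayBuffer());
  return pngDimensions(bytes);
}

// Usage: a hand-built 24-byte PNG header for a 640x480 image.
const header = new Uint8Array([
  0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a, // PNG signature
  0x00, 0x00, 0x00, 0x0d,                         // IHDR data length (13)
  0x49, 0x48, 0x44, 0x52,                         // "IHDR"
  0x00, 0x00, 0x02, 0x80,                         // width  = 640
  0x00, 0x00, 0x01, 0xe0,                         // height = 480
]);
console.log(pngDimensions(header)); // { width: 640, height: 480 }
```

Knowing the size this early lets the browser reserve the right amount of layout space for a lazy image before its pixels arrive, so the page does not jump when the full file loads.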